Deep Learning Training

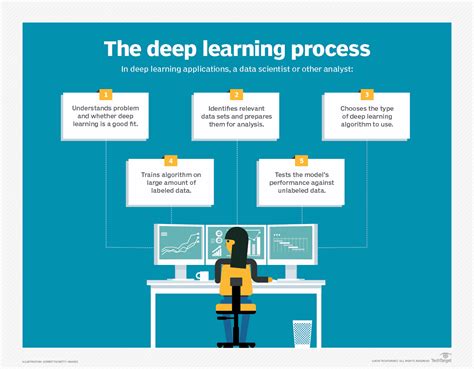

Deep learning, a subset of machine learning, has reshaped many industries and continues to push the boundaries of artificial intelligence (AI). At its core, deep learning involves training artificial neural networks (ANNs) to make decisions or predictions from large amounts of data. Training is the process that turns a freshly initialized network into a model that performs a useful task.

The Fundamentals of Deep Learning Training

Deep learning training involves feeding large datasets into ANNs and adjusting their internal parameters (weights and biases) to optimize performance. The adjustment relies on an algorithm known as backpropagation, which propagates errors backward through the network to compute how much each parameter contributed to them, so that an optimizer can update the parameters and the network can refine its internal representations.

The training phase is crucial as it determines the accuracy and effectiveness of the deep learning model. It is during this phase that the model learns to generalize from the training data and make accurate predictions on new, unseen data.

Key Components of Deep Learning Training

- Neural Network Architecture: The design of the neural network, including the number of layers, neurons, and connections, is a critical aspect of training. Different architectures are suited to different types of problems and datasets.

- Training Data: High-quality, diverse, and representative training data is essential. The model learns from this data, so its quantity and quality directly impact the model’s performance.

- Optimization Algorithms: These algorithms, such as gradient descent and its variants, are used to adjust the model’s parameters during training to minimize errors and improve performance.

- Loss Function: A loss function measures the discrepancy between the model’s predictions and the true values in the training data. It guides the optimization process by providing a quantitative measure of the model’s performance.
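To make the loss function component concrete, here is a minimal sketch of two widely used losses written in plain Python. The function names and the sample predictions are illustrative assumptions, not something prescribed by a particular library.

```python
import math

def mean_squared_error(y_true, y_pred):
    """Average squared difference; a common choice for regression."""
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)

def binary_cross_entropy(y_true, y_pred, eps=1e-12):
    """Average negative log-likelihood for labels in {0, 1}."""
    total = 0.0
    for t, p in zip(y_true, y_pred):
        p = min(max(p, eps), 1.0 - eps)  # clip to avoid log(0)
        total += -(t * math.log(p) + (1 - t) * math.log(1.0 - p))
    return total / len(y_true)

# Made-up predictions for four examples with known labels.
labels = [1, 0, 1, 1]
confident = [0.9, 0.1, 0.8, 0.95]
uncertain = [0.6, 0.4, 0.5, 0.55]

print(binary_cross_entropy(labels, confident))
print(binary_cross_entropy(labels, uncertain))
```

Predictions that sit closer to the true labels produce a lower loss, which is the signal the optimization algorithm tries to reduce.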

The Training Process: A Deep Dive

Deep learning training is an iterative process that typically involves the following steps:

1. Data Preparation

The first step is to collect and prepare the training data. This involves data cleaning, normalization, and potentially augmentation to ensure the data is diverse and representative of the problem domain.

For example, in image recognition tasks, data augmentation techniques like rotation, flipping, and scaling are often used to generate additional training samples, helping the model generalize better.
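The preparation steps above can be sketched in a few lines. This example assumes an image is stored as a list of pixel rows and uses hypothetical helper names; real pipelines usually rely on a dedicated image library instead.

```python
def standardize(values):
    """Rescale a feature to zero mean and unit variance."""
    mean = sum(values) / len(values)
    var = sum((v - mean) ** 2 for v in values) / len(values)
    std = var ** 0.5 or 1.0  # guard against constant features
    return [(v - mean) / std for v in values]

def horizontal_flip(image):
    """Mirror an image stored as a list of pixel rows."""
    return [list(reversed(row)) for row in image]

def rotate_90(image):
    """Rotate an image 90 degrees clockwise."""
    return [list(row) for row in zip(*image[::-1])]

# A tiny 2x3 "image" used only for illustration.
image = [[1, 2, 3],
         [4, 5, 6]]

# One original sample becomes three training samples.
augmented = [image, horizontal_flip(image), rotate_90(image)]
```

Each transformed copy keeps the original label, so the model sees more variety without any new labeling effort.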

2. Network Initialization

Before training begins, the neural network’s parameters, such as weights and biases, are initialized. Various initialization methods exist, each with its own advantages and use cases. For instance, Xavier (Glorot) initialization scales the initial weights according to the number of inputs and outputs of each layer, which helps keep gradients in deep networks from vanishing or exploding.
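As an illustration, the uniform variant of Xavier initialization draws each weight from a range that depends on the layer's input and output sizes. The sketch below assumes a 256-to-128 layer and a fixed random seed; both are arbitrary choices for the example.

```python
import math
import random

def xavier_uniform(fan_in, fan_out, rng=random):
    """Draw weights from U(-limit, limit), limit = sqrt(6 / (fan_in + fan_out))."""
    limit = math.sqrt(6.0 / (fan_in + fan_out))
    return [[rng.uniform(-limit, limit) for _ in range(fan_out)]
            for _ in range(fan_in)]

rng = random.Random(0)              # fixed seed so the run is repeatable
weights = xavier_uniform(256, 128, rng)
biases = [0.0] * 128                # biases are commonly started at zero
```

Wider layers get a smaller range, which keeps the scale of the layer outputs roughly stable as signals pass through the network.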

3. Forward Propagation

In this step, the training data is fed into the neural network, and the network computes its predictions. The output is then compared to the true labels in the training data.

For instance, in a simple binary classification task, the network might output a probability value between 0 and 1, indicating the likelihood of the input belonging to one of the two classes.
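A forward pass for such a binary classifier might look like the following sketch. The two-layer shape, the ReLU hidden activation, and the parameter values are assumptions made for the example.

```python
import math

def sigmoid(z):
    """Squash a real number into the interval (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def dense(inputs, weights, biases):
    """One fully connected layer; weights[j] holds the weights of output unit j."""
    return [sum(w * x for w, x in zip(row, inputs)) + b
            for row, b in zip(weights, biases)]

def forward(x, params):
    """Two-layer network: ReLU hidden layer followed by a sigmoid output."""
    hidden = [max(0.0, h) for h in dense(x, params["W1"], params["b1"])]
    logit = dense(hidden, params["W2"], params["b2"])[0]
    return sigmoid(logit)

# Hand-picked parameters; a real network would start from an initializer.
params = {
    "W1": [[0.5, -0.2], [0.1, 0.4]],
    "b1": [0.0, 0.1],
    "W2": [[0.3, -0.6]],
    "b2": [0.05],
}

probability = forward([1.0, 2.0], params)
```

The returned value is the predicted probability of the positive class, which is then compared with the true label by the loss function.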

4. Backward Propagation (Backpropagation)

Backpropagation is a core algorithm in deep learning training. It calculates the gradients of the loss function with respect to the network’s parameters, propagating errors backward through the network. These gradients are then used to update the parameters in the next step.
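The chain-rule bookkeeping behind backpropagation is easiest to see on a very small network. The sketch below assumes one tanh hidden unit, one sigmoid output, and a cross-entropy loss, then checks the analytic gradients against a finite-difference estimate. All names and values are illustrative.

```python
import math

def forward(w1, w2, x):
    h = math.tanh(w1 * x)                  # hidden activation
    p = 1.0 / (1.0 + math.exp(-w2 * h))    # predicted probability
    return h, p

def loss(w1, w2, x, t):
    """Binary cross-entropy for a single example with label t."""
    _, p = forward(w1, w2, x)
    return -(t * math.log(p) + (1 - t) * math.log(1 - p))

def backward(w1, w2, x, t):
    """Apply the chain rule from the loss back to each weight."""
    h, p = forward(w1, w2, x)
    d_logit = p - t                 # dL/d(logit) for sigmoid + cross-entropy
    d_w2 = d_logit * h              # logit = w2 * h
    d_h = d_logit * w2              # error flowing back into the hidden unit
    d_w1 = d_h * (1 - h * h) * x    # tanh'(a) = 1 - tanh(a)^2
    return d_w1, d_w2

w1, w2, x, t = 0.7, -1.2, 0.5, 1
grad_w1, grad_w2 = backward(w1, w2, x, t)

# Finite-difference check of the analytic gradients.
eps = 1e-6
num_w1 = (loss(w1 + eps, w2, x, t) - loss(w1 - eps, w2, x, t)) / (2 * eps)
num_w2 = (loss(w1, w2 + eps, x, t) - loss(w1, w2 - eps, x, t)) / (2 * eps)
```

A numerical check like this is a common way to catch mistakes in hand-written gradient code; deep learning frameworks compute the same quantities automatically.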

5. Parameter Update

Using the gradients calculated in the backpropagation step, the optimization algorithm updates the network’s parameters. This process is repeated for a specified number of iterations or until the model’s performance converges or meets certain criteria.
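A minimal sketch of the update rule, assuming plain full-batch gradient descent on a toy linear model. The data, learning rate, and epoch count are arbitrary choices for the example.

```python
def gradient_step(params, grads, learning_rate):
    """Move each parameter a small step against its gradient."""
    return [p - learning_rate * g for p, g in zip(params, grads)]

# Toy problem: fit y = w * x + b to points that lie on y = 2x + 1.
data = [(0.0, 1.0), (1.0, 3.0), (2.0, 5.0), (3.0, 7.0)]
params = [0.0, 0.0]  # [w, b]

for epoch in range(2000):
    w, b = params
    # Gradients of the mean squared error with respect to w and b.
    grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / len(data)
    grad_b = sum(2 * (w * x + b - y) for x, y in data) / len(data)
    params = gradient_step(params, [grad_w, grad_b], learning_rate=0.05)

print(params)
```

In a neural network the gradients would come from backpropagation rather than a closed-form expression, but the update itself has the same shape.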

6. Model Evaluation

During and after training, the model’s performance is evaluated on a separate validation dataset. This helps to ensure that the model is not overfitting to the training data and can generalize well to new, unseen data.

Common evaluation metrics include accuracy, precision, recall, F1 score, and area under the receiver operating characteristic curve (AUC-ROC) for classification tasks, and mean squared error, mean absolute error, and R-squared for regression tasks.
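For binary classification, the first four metrics can be computed directly from the counts of true and false positives and negatives. The sketch below uses made-up labels and a hypothetical function name.

```python
def classification_metrics(y_true, y_pred):
    """Accuracy, precision, recall, and F1 for binary labels in {0, 1}."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)
    return {
        "accuracy": (tp + tn) / len(pairs),
        "precision": precision,
        "recall": recall,
        "f1": f1,
    }

# Made-up validation labels and predictions.
y_true = [1, 0, 1, 1, 0, 0, 1, 0]
y_pred = [1, 0, 0, 1, 0, 1, 1, 0]
metrics = classification_metrics(y_true, y_pred)
```

With these labels there are three true positives, one false positive, one false negative, and three true negatives, so all four metrics come out to 0.75.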

Advanced Training Techniques

Deep learning training is an active area of research, and several advanced techniques have emerged to improve the training process and model performance.

Transfer Learning

Transfer learning involves taking a model that has been pre-trained on a large dataset and fine-tuning it for a new, related task. This technique is particularly useful when the new task has limited training data, as it reuses the knowledge the pre-trained model has already learned.

For example, a model pre-trained on ImageNet, a large-scale image database, can be fine-tuned for specific object detection tasks, significantly reducing the amount of new data required for training.
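One common form of fine-tuning keeps the pre-trained layers frozen and trains only a new output layer. The sketch below is a toy version of that idea: `pretrained_features` is a hypothetical stand-in for a real pre-trained network, and the four-example dataset is invented for the illustration.

```python
import math

def pretrained_features(x):
    """Hypothetical frozen feature extractor; its parameters are never updated."""
    return [x[0] + x[1], x[0] - x[1]]

def predict(head, x):
    """Apply the trainable output layer on top of the frozen features."""
    w, b = head
    z = sum(wi * fi for wi, fi in zip(w, pretrained_features(x))) + b
    return 1.0 / (1.0 + math.exp(-z))

def fine_tune_head(data, epochs=500, lr=0.1):
    """Train only the new output layer with per-example gradient steps."""
    w, b = [0.0, 0.0], 0.0
    for _ in range(epochs):
        for x, t in data:
            f = pretrained_features(x)
            err = predict((w, b), x) - t   # cross-entropy gradient w.r.t. the logit
            w = [wi - lr * err * fi for wi, fi in zip(w, f)]
            b -= lr * err
    return w, b

# Small labeled set for the new task: the label is 1 when x[0] > x[1].
data = [([2.0, 1.0], 1), ([0.5, 1.5], 0), ([3.0, 0.0], 1), ([1.0, 2.0], 0)]
head = fine_tune_head(data)
```

Because only three numbers are trained, a handful of examples is enough here; the same reasoning explains why fine-tuning needs far less data than training from scratch.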

Regularization Techniques

Regularization techniques are used to prevent overfitting, which occurs when a model performs well on the training data but poorly on new data. Common regularization methods include dropout, which randomly deactivates a fraction of the neurons at each training step, and L1/L2 regularization, which adds a penalty term to the loss function based on the magnitude of the weights (absolute values for L1, squared values for L2).
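Both ideas fit in a few lines. The sketch below assumes the "inverted" form of dropout, which rescales the surviving activations during training so nothing has to change at inference time; the penalty strength and drop rate are arbitrary example values.

```python
import random

def l2_penalty(weights, lam):
    """Term added to the loss: lam times the sum of squared weights."""
    return lam * sum(w * w for w in weights)

def dropout(activations, rate, rng, training=True):
    """Inverted dropout: zero each activation with probability `rate`
    and rescale the survivors so the expected value is unchanged."""
    if not training or rate == 0.0:
        return list(activations)
    keep = 1.0 - rate
    return [a / keep if rng.random() < keep else 0.0 for a in activations]

rng = random.Random(0)
hidden = [1.0] * 10
dropped = dropout(hidden, rate=0.5, rng=rng)               # training mode
unchanged = dropout(hidden, rate=0.5, rng=rng, training=False)
penalty = l2_penalty([3.0, 4.0], lam=0.01)
```

During training each activation is either zeroed or doubled (for a rate of 0.5), while at evaluation time the layer passes its input through untouched.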

Batch Normalization

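Batch normalization normalizes the inputs to each layer of the neural network using the mean and variance of the current mini-batch, then applies a learned scale and shift. It helps to stabilize and accelerate the training process. It was originally motivated as a way to reduce internal covariate shift, which refers to the change in the distribution of network activations during training, although later work has questioned whether that is the main reason it helps.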
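A minimal sketch of the training-time computation for a single feature, assuming the usual scale (`gamma`) and shift (`beta`) parameters are left at their default starting values. It omits the running statistics that are normally tracked for use at inference time.

```python
def batch_norm(batch, gamma=1.0, beta=0.0, eps=1e-5):
    """Normalize one feature across a mini-batch, then scale and shift."""
    mean = sum(batch) / len(batch)
    var = sum((x - mean) ** 2 for x in batch) / len(batch)
    return [gamma * (x - mean) / (var + eps) ** 0.5 + beta for x in batch]

# Activations of one unit for a mini-batch of four examples.
activations = [2.0, 4.0, 6.0, 8.0]
normalized = batch_norm(activations)
```

The output has a mean of approximately zero and a variance of approximately one, regardless of the scale of the incoming activations.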

Data Augmentation

Data augmentation, as mentioned earlier, involves applying various transformations to the training data to create additional samples. This technique is particularly useful in domains where labeled data is scarce, such as medical imaging.

For instance, in medical imaging tasks, data augmentation might involve flipping, rotating, and scaling images to generate new training samples, helping the model learn more robust features.

Performance Analysis and Optimization

Analyzing and optimizing deep learning models is a critical aspect of the training process. It involves understanding the model’s performance, identifying areas for improvement, and making necessary adjustments.

Hyperparameter Tuning

Hyperparameters are configuration settings that are not learned from data but are set before training. They include the learning rate, batch size, number of epochs, and the architecture of the neural network. Tuning these hyperparameters can significantly impact the model’s performance.

Techniques like grid search, random search, and Bayesian optimization are often used to find the optimal hyperparameter values.
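Grid search and random search can be sketched as follows. The `validation_score` function is a hypothetical stand-in for "train a model with this configuration and return its validation score"; its formula and the candidate values are made up for the example.

```python
import itertools
import random

def validation_score(learning_rate, batch_size):
    """Hypothetical stand-in for training a model and scoring it on validation data."""
    return 1.0 - abs(learning_rate - 0.01) * 10 - abs(batch_size - 64) / 1000

def grid_search(grid):
    """Evaluate every combination in the grid and keep the best one."""
    names = list(grid)
    best = None
    for values in itertools.product(*(grid[n] for n in names)):
        config = dict(zip(names, values))
        score = validation_score(**config)
        if best is None or score > best[0]:
            best = (score, config)
    return best

def random_search(space, trials, rng):
    """Sample configurations at random instead of enumerating all of them."""
    best = None
    for _ in range(trials):
        config = {name: rng.choice(options) for name, options in space.items()}
        score = validation_score(**config)
        if best is None or score > best[0]:
            best = (score, config)
    return best

grid = {"learning_rate": [0.1, 0.01, 0.001], "batch_size": [32, 64, 128]}
best_score, best_config = grid_search(grid)
rs_score, rs_config = random_search(grid, trials=5, rng=random.Random(0))
```

Grid search cost grows multiplicatively with every added hyperparameter, which is why random search is often preferred when the search space is large and the trial budget is fixed.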

Early Stopping

Early stopping is a technique used to prevent overfitting by halting training once the model’s performance on the validation set stops improving or starts to deteriorate, typically after a set number of epochs without improvement (the patience). This helps to ensure that the model learns the underlying patterns in the data without memorizing the training examples.
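The stopping rule can be sketched as a small function over a history of validation losses. The `patience` parameter and the loss values are assumptions for the example; in a real training loop the check would run after each epoch.

```python
def early_stopping_epoch(val_losses, patience=3):
    """Return (best_epoch, stop_epoch) for a sequence of validation losses.

    Training stops once the loss has failed to improve for `patience`
    consecutive epochs; the best epoch is the checkpoint to restore.
    """
    best_loss = float("inf")
    best_epoch = -1
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss:
            best_loss, best_epoch = loss, epoch
        elif epoch - best_epoch >= patience:
            return best_epoch, epoch
    return best_epoch, len(val_losses) - 1

# Made-up validation losses: improving at first, then drifting upward.
history = [0.90, 0.70, 0.55, 0.50, 0.52, 0.51, 0.53, 0.60, 0.65]
best_epoch, stop_epoch = early_stopping_epoch(history, patience=3)
```

Here the lowest loss occurs at epoch 3 and training halts at epoch 6, three epochs later, so the weights saved at epoch 3 would be the ones to keep.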

Model Pruning and Compression

Model pruning involves removing redundant or less important connections or neurons from the neural network to reduce its size and computational requirements. This can lead to faster inference times and smaller memory footprints.

Model compression techniques, such as quantization and knowledge distillation, are also used to reduce the model's size and complexity while maintaining its performance.
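Two of these ideas, magnitude-based pruning and 8-bit quantization, can be illustrated on a flat list of weights. This is a simplified sketch with hypothetical function names; production tools work on whole tensors and often retrain the model after compressing it.

```python
def magnitude_prune(weights, sparsity):
    """Zero out the smallest-magnitude fraction of the weights.

    Ties at the threshold are all pruned, so slightly more than the
    requested fraction may be removed.
    """
    k = int(len(weights) * sparsity)
    if k == 0:
        return list(weights)
    threshold = sorted(abs(w) for w in weights)[k - 1]
    return [0.0 if abs(w) <= threshold else w for w in weights]

def quantize_int8(weights):
    """Symmetric linear quantization to integers in [-127, 127]."""
    scale = max(abs(w) for w in weights) / 127 or 1.0
    return [round(w / scale) for w in weights], scale

def dequantize(quantized, scale):
    """Map the integers back to approximate floating-point weights."""
    return [q * scale for q in quantized]

weights = [0.8, -0.05, 0.3, 0.01, -0.6, 0.02]
pruned = magnitude_prune(weights, sparsity=0.5)
quantized, scale = quantize_int8(weights)
restored = dequantize(quantized, scale)
```

Pruning half of these weights leaves only 0.8, 0.3, and -0.6 in place, and each dequantized weight differs from its original by at most half a quantization step.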

Future Implications and Challenges

Deep learning training has come a long way, but several challenges and opportunities lie ahead.

Ethical Considerations

As deep learning models become more powerful and pervasive, ethical considerations become increasingly important. Issues such as bias in training data, privacy concerns, and the potential impact of AI on society and employment need to be carefully addressed.

Explainable AI

While deep learning models can achieve remarkable performance, they are often considered “black boxes” due to the complexity of their internal workings. Developing techniques to make these models more interpretable and explainable is an active area of research, known as Explainable AI (XAI).

Energy Efficiency

Deep learning training often requires significant computational resources and energy. Developing more energy-efficient training algorithms and hardware is crucial to making deep learning more accessible and environmentally sustainable.

Continual Learning

Deep learning models typically require large amounts of data for training, but in many real-world scenarios, data is not always available in advance. Continual learning, also known as lifelong learning, is an area of research focused on developing models that can learn continuously from new data without forgetting previously learned tasks.

What is the main difference between deep learning and traditional machine learning algorithms?

Deep learning algorithms, unlike traditional machine learning algorithms, use artificial neural networks with multiple layers (hence “deep”) to learn complex representations of data. This allows deep learning models to automatically learn hierarchical representations from raw data, making them particularly effective for tasks like image and speech recognition, natural language processing, and more.

How long does it typically take to train a deep learning model?

The training time for a deep learning model can vary significantly depending on factors such as the complexity of the model, the size of the dataset, the computational resources available, and the optimization techniques used. Training times can range from minutes to days, or even weeks for very large models and datasets.

What are some common challenges in deep learning training, and how can they be addressed?

Common challenges in deep learning training include overfitting, vanishing/exploding gradients, and the need for large amounts of labeled data. Overfitting can be addressed through regularization techniques such as dropout and L1/L2 penalties, and through early stopping. Gradient issues can be mitigated using careful weight initialization, batch normalization, and gradient clipping. To address the need for large datasets, techniques like data augmentation and transfer learning can be employed.